Archive for the ‘SAS’ Category

As you may remember, when SATA drive technology came around several years ago, it was a very exciting time. This new low-cost, high-capacity commodity disk drive revolutionized data storage for the home computer.

This fueled the age of the digital explosion. Digital photos and media quickly, and affordably, filled hard drives around the world. This digital explosion propelled companies like Apple and Google into the hundreds of billions in revenue. It also propelled explosive data growth in the enterprise.

The SAN industry scrambled to meet this demand. SAN vendors such as EMC, NetApp and others saw the opportunity to move into a new market using these same affordable high-capacity drives to quench the thirst for storage.

The concept of using SATA drives in a SAN went mainstream. Companies that once could not afford a SAN could now buy one with larger capacities for a fraction of the cost of a traditional SAN. This was so popular that companies bought SATA-based SANs in bulk, often several batches at a time.

As time progressed, these drives started failing. SATA drives were known for their low MTBF (mean time between failures). SATA SANs employed RAID 5 at first, which protects against a single drive failure but not against a dual drive failure.

As companies started to employ RAID 6 technology, a dual drive failure no longer resulted in data loss.

The “Perfect Storm” even with RAID 6 protection looks like this…

– Higher Capacity Drives = longer rebuild times: The industry has released 3TB drives. Rebuild times vary by SAN vendor, but I have seen a rebuild of a 2TB drive take 6 days

– Denser Array Footprint = increased heat and vibrations: Dramatically reducing MTBF

– Outsourced drive manufacturing to third world countries = increased rate of drive failures, particularly in batches or series: Quality control and management are lacking in outsourced facilities, resulting in mass defects

– Common MTBF in Mass Numbers = drives will fail around the same time: This is a statistical game. For example, a 3% failure rate for a SAN in a datacenter is acceptable, but when there are mass quantities of these drives, 3% will approach and/or exceed the fault tolerance of RAID

– Virtualized Storage = Complexity in recovery: Most SAN vendors now have virtualized storage, but recovery will vary depending on how they do their virtualization

– Media Errors on Drives = Failure to successfully rebuild RAID volumes: The larger the drive, the greater the chance of media errors. Media errors are errors on the drive that render small bits of data unreadable. A rebuild of a RAID volume may be compromised or may fail because of these errors.
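To put rough numbers on the rebuild-time and media-error points above, here is a minimal sketch. It assumes that rebuild time scales linearly with capacity from the 6-day, 2TB observation, and that the drive has an unrecoverable read error rate of 1 bit in 10^14, a figure often quoted on consumer SATA spec sheets but not taken from this post. The function names are made up for illustration, and real arrays will behave differently.

```python
import math


def rebuild_days(capacity_tb, observed_tb=2.0, observed_days=6.0):
    """Scale the observed rebuild time linearly with drive capacity.

    Assumption: the array rebuilds at the same effective rate
    regardless of drive size (6 days for 2TB, as observed above).
    """
    return observed_days * capacity_tb / observed_tb


def p_media_error(tb_read, ure_per_bit=1e-14):
    """Chance of at least one unreadable bit while reading tb_read terabytes.

    Assumption: each bit fails independently with probability ure_per_bit.
    Computes 1 - (1 - p)^n in a numerically stable way for tiny p.
    """
    bits = tb_read * 1e12 * 8
    return -math.expm1(bits * math.log1p(-ure_per_bit))


if __name__ == "__main__":
    print(f"3TB rebuild estimate: {rebuild_days(3.0):.1f} days")
    for tb in (2, 6, 14):
        print(f"reading {tb:>2} TB: {p_media_error(tb):.0%} chance of a media error")
```

How much damage a media error does during a rebuild depends on how much redundancy is left at that moment, so treat these figures as a way to see the trend, not as a prediction.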

Don’t be fooled into a false sense of security by having just RAID 6. Employ good backups and data replication as an extension of a good business continuity or disaster recovery plan.
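The statistical game can be sketched with a simple binomial model. The assumptions here are mine, not measurements: every drive fails independently with the same probability during the period of interest, the 3% figure is treated as that per-drive probability, and the 16-drive group size is hypothetical. Drives from a bad batch fail together, so independence is the optimistic case.

```python
from math import comb


def expected_failures(drives, rate):
    """Average number of failed drives in a fleet of `drives`."""
    return drives * rate


def p_more_than(drives, rate, tolerated):
    """Probability that more than `tolerated` drives in one group fail.

    Assumption: failures are independent (binomial model).
    tolerated=1 corresponds to RAID 5, tolerated=2 to RAID 6.
    """
    p_survive = sum(
        comb(drives, k) * rate**k * (1 - rate) ** (drives - k)
        for k in range(tolerated + 1)
    )
    return 1.0 - p_survive


if __name__ == "__main__":
    print(f"expected failures in 1000 drives: {expected_failures(1000, 0.03):.0f}")
    print(f"16-drive RAID 5 group exceeded: {p_more_than(16, 0.03, 1):.2%}")
    print(f"16-drive RAID 6 group exceeded: {p_more_than(16, 0.03, 2):.2%}")
```

Even in this optimistic model the RAID 6 figure is not zero, and it applies to every group in the datacenter.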

As the industry moves to different technologies, other new and interesting anomalies will develop.

In technology, there is never a dull moment.

Posted by yobitech on February 25, 2012 at 10:13 pm under Backup, General, RAID, SAN, SAS.

Comments Off on The Perfect Storm.

If you are like me, you like to have the latest and greatest technology. From iPads to smart phones, I just love this stuff. If I am honest with myself, I can truly say I really don’t NEED all this stuff. It is just nice having…

Many others (mostly guys) are similar in this way, and we look at data storage the same way. We like to own the fastest drives, the smartest arrays and best-in-class technologies. In today’s tough economy, companies are looking for their IT departments to do more with the technology they have: to leverage existing equipment and investments, and to buy only if a purchase gives them a competitive advantage. Many companies are holding off on making purchases, and when they do make the investment, it is typical for them to stretch their 3-year maintenance contracts to 5 years.

Let’s face it, data is exploding and storing it is just darn expensive! The upfront costs can be very high. The golden question to answer is: do I really need the latest and greatest? In a world where money was not a factor, I would have SSDs in all my devices and in my SAN, but unfortunately cost is a factor. SSDs are used mainly for very specific purposes, like extending cache in a SAN, or for latency-sensitive applications such as databases and virtual desktop systems. For the other 95% of applications, rotating media is sufficient.

SAS, FC, SATA, PATA, SCSI hard drives

The evolution of disk drives can be confusing, especially if you are new to the industry. We hear a lot about SAS drives these days, but what does it mean? What is the difference between SAS, FC, SATA and SCSI hard drives? Mechanically, not a lot. With the exception of bearings and electronics that affect the MTBF (Mean Time Between Failures), the difference is mostly the drive interface.

Below are the interface types that are most common on today’s hard drives.

SAS – Serial Attached SCSI: The successor to parallel SCSI and, in many arrays, a replacement for FC drives. Based on the SCSI command set, a SAS wide port can carry 4 lanes of 3Gb/s or 6Gb/s I/O simultaneously.

FC – Fibre Channel: Commonly used in SAN systems as high-end, enterprise class hard drives.

SATA – Serial Advanced Technology Attachment: A commonly used interface in consumer-grade hard drives. This was a replacement for ATA (AT Attachment, also known as IDE/EIDE and later renamed PATA, Parallel AT Attachment) drives.

SCSI – Small Computer System Interface: A set of standards for peripheral connection to computer systems. This is the most common type among all business-class hard drives.

The bottom line is, there are alternatives. SATA and SCSI, when architected correctly, can support a major part of your data. So before you go out and make a considerable investment, ask the question, “Do I need the latest and greatest?”

Posted by yobitech on May 23, 2011 at 3:44 pm under SAS, SCSI, SSD.


People often ask me the question, “What’s the difference between a Seagate 1TB 7.2k drive and a Western Digital 1TB 7.2k drive?” and I usually say the manufacturer…

Other than some differences in the mechanism, electronics and caching algorithms, generally, there is not much that is different between hard drives.

Hard drives are essentially physical blocks of storage with a designated capacity, a set rotational speed and a connection interface (SCSI, FC, SAS, IDE, SATA, etc…). Hard drive performance is usually measured in IOPS (Input/Output Operations Per Second) for each hard drive. For example, a 15k RPM drive will yield about 175 IOPS per drive, while a 10k RPM drive will yield about 125 IOPS per drive.

In the business setting, most companies store their information on a SAN (Storage Area Network). A SAN is also known as an intelligent storage array. An intelligent storage array is commonly made up of 1 or more controllers (aka Storage Processors or SPs) controlling groups of disk drives. The intelligence of the SAN resides in the controllers (SPs).

This “Intelligence” is the secret sauce for each storage vendor. EMC, NetApp, HP, IBM, Dell, etc. are examples of SAN vendors and each will vary on the intelligence and capabilities in their controllers. Without this intelligence, these groups of disks are known as JBOD (Just a Bunch of Disks). I know, I know, I don’t make these acronyms up.

Disks that are organized in a SAN work together to yield the IOPS necessary for the backend performance that meets the service levels driven by the application. For example, if an application demands 500 IOPS on average, how many disk drives do I need to adequately service it? (This is an oversimplified sizing exercise, for there are many factors that come into play when it comes to sizing: RAID type, read/write ratios, connection types, etc.) With each hard drive producing a set number of IOPS, is it possible to “squeeze” more performance out of the same set of hard drives? The answer is yes.
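The sizing question above can be sketched in a few lines. The per-drive IOPS figures are the ones quoted in this post. The read/write split and the RAID write penalty are optional extras I added as assumptions; a penalty of 4 for RAID 5 is a common rule of thumb, not a vendor figure.

```python
import math

# Approximate per-drive figures quoted earlier in this post.
IOPS_PER_DRIVE = {"15k": 175, "10k": 125}


def drives_needed(target_iops, rpm_class, read_fraction=1.0, write_penalty=1):
    """Estimate how many drives it takes to serve target_iops.

    read_fraction and write_penalty are illustrative assumptions:
    each front-end write is counted as write_penalty back-end I/Os.
    With the defaults, this is a plain division rounded up.
    """
    per_drive = IOPS_PER_DRIVE[rpm_class]
    reads = target_iops * read_fraction
    writes = target_iops * (1.0 - read_fraction)
    backend_iops = reads + writes * write_penalty
    return math.ceil(backend_iops / per_drive)


if __name__ == "__main__":
    print("15k drives for 500 IOPS:", drives_needed(500, "15k"))
    print("10k drives for 500 IOPS:", drives_needed(500, "10k"))
    print("15k drives, 70% reads, RAID 5:", drives_needed(500, "15k", 0.7, 4))
```

With the simple version, 500 IOPS needs three 15k drives or four 10k drives. Once writes and RAID overhead are counted, the number grows quickly, which is why the simple division is only a starting point.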

Remember the Merry-Go-Round? Why is it always more fun on the outside than the inside of the ride? We all knew as kids that we always wanted to be on the outside of the ride, screaming, because things seemed to be faster. Not that the ride spun any faster, but the farther out you were from the center, the more distance you covered in each revolution.

The same theory is true with hard drives. The outer tracks of the hard drive will always yield more data per revolution than the inner tracks. More data means more performance. Utilizing only the outer tracks of a hard drive to yield better performance is a technique known as “short stroking” the disk. This technique is used by a few SAN manufacturers. One of the vendors that does this is Compellent (now Dell/Compellent). They call this feature “Fast Track”. Compellent is a pioneer in the virtual storage arena, trail blazing next generation storage for the enterprise. Another vendor that does this is Pillar.
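The merry-go-round effect is easy to put into numbers. This sketch assumes an idealized platter where bits are packed at the same density everywhere, so data per revolution grows with the track radius. The 20 mm and 46 mm radii are illustrative guesses for a 3.5” drive, not figures from any datasheet, and this is not how any particular vendor implements the feature.

```python
import math


def linear_speed_m_per_s(radius_mm, rpm):
    """Speed of the platter surface passing under the head at a given radius."""
    return 2 * math.pi * (radius_mm / 1000.0) * rpm / 60.0


def outer_to_inner_ratio(outer_mm, inner_mm):
    """How much more data one revolution covers on the outer track.

    Assumption: constant bit density along the track, so data per
    revolution is proportional to the track circumference.
    """
    return outer_mm / inner_mm


def capacity_fraction(outer_mm, inner_mm, cutoff_mm):
    """Share of total capacity held between cutoff_mm and the outer edge.

    Assumption: constant areal density, so capacity is proportional to area.
    """
    total_area = outer_mm**2 - inner_mm**2
    outer_band = outer_mm**2 - cutoff_mm**2
    return outer_band / total_area


if __name__ == "__main__":
    print(f"outer edge at 7200 RPM: {linear_speed_m_per_s(46, 7200):.1f} m/s")
    print(f"inner edge at 7200 RPM: {linear_speed_m_per_s(20, 7200):.1f} m/s")
    print(f"outer vs inner data per revolution: {outer_to_inner_ratio(46, 20):.1f}x")
    print(f"capacity in outer 10 mm band: {capacity_fraction(46, 20, 36):.0%}")
```

Under these assumptions, the outermost band is both the fastest part of the disk and a large share of its capacity, which is what makes short stroking attractive.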

So at the end of the day, getting back to that question, “What’s the difference between a Seagate 1TB drive and a Western Digital 1TB drive?” For me, my answer is still the same… but it is how the disks are intelligently managed and utilized that will ultimately make the difference.

Posted by yobitech on April 20, 2011 at 11:09 am under General, NAS, SAN, SAS, SCSI.


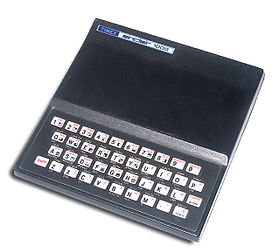

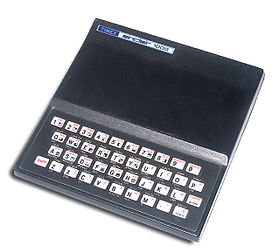

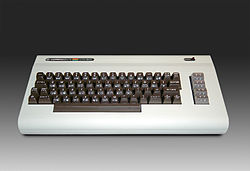

I remember when I got my first computer. It was a Timex Sinclair.

Yes, the watch company made a computer. It had about 2k of RAM with a flimsy cellular buttoned keyboard. It had no hard drive or floppy disks, but it had a cassette tape player as the storage device. That’s right, the good old cassette tape. I bought a game on cassette tape with the computer back in the 80s and it took about 15 minutes to load the game. It was painful, but worth it.

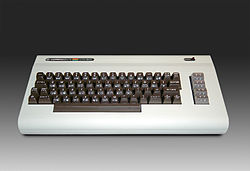

From there, I got a Commodore VIC-20 with a 5.25” floppy drive. Wow, this was fast! Going from cassette to floppy disk seemed like a leap of years. I even used a hole-puncher to notch the side of the disk so I could use the flip side of the floppy. We didn’t have that many choices back then. Most games and applications loaded and ran right off of removable media.

I remember when hard drives first came out. They were very expensive and out of reach of the masses. A few years later, I saved up enough money to buy a 200MB hard drive by Western Digital. I paid $400 for that hard drive. It was worth every penny.

Since then, I have always been impressed by how manufacturers were able to get more density on each disk every 6 months. Now, with 3TB drives coming out soon, it never ceases to amaze me.

It is interesting how storage is going in 2 directions: Solid State Disk (SSD) and Magnetic Hard Disk (HD). If SSD is the next generation, then what does that mean for the HD? Will the HD disappear? Not for a while… This is because there are 2 factors: Cost and Application.

At the present time, a 120GB SSD is about the same cost as a 1TB drive. So it makes sense to go with a 1TB drive. Although this may be true from a capacity standpoint, the Application ultimately dictates what drive(s) are used to store the data. For example, although a 1TB drive may be able to store video for a video editor, the drive may be too slow for the data to be processed. The same goes for databases, where response time and latency are critical to the application.

Capacity alone simply will not cut it. This is when using multiple disks in a RAID (Redundant Array of Independent Disks) set will provide performance as well as capacity. SSDs can also be used because the access and response times of these drives are inherently faster.

As long as there is a price-to-capacity gap, the HD will be around. So architecting storage today is a complex art for this dynamic world of applications.

I hope to use this blog as a forum to shed light on storage and where the industry is going.

Posted by yobitech on April 18, 2011 at 2:16 pm under Cloud, General, RAID, SAS, SCSI, SSD.
